20140603 TerraHertz http://everist.org NobLog home Updated: 20190101

The history of technology can be fascinating. Technological history is often ignored by so-called historians, who concentrate on political and social developments. Yet politics and social systems can be considered constructs of deception and of often unfounded, even irrational beliefs, whereas technological history is an evolution of practical, engineering-based truths (or, in some cases, failures). The underlying intentions were usually to produce something that worked as intended, based on sound reasoning and scientific theory, with a clearly and honestly defined purpose.

It can be argued that technology predominantly steers political history, both through the weaponising of technology and through the social changes technology enables. In that sense, is Technological History not as important as the resulting Political History, or even more so? Or at least more fundamental? Personally I find it much more interesting, and so it's quite saddening to see details of technological history being lost, discarded without thought as 'obsolete' trivia.

It is said those who forget the past are doomed to relive it. Since the dawn of the Industrial Revolution we have been riding a continuous wave of technological development, and we are not widely aware of any precedent of humankind having forgotten such knowledge. So we assume regression can't happen and there is no need to carefully preserve old technological knowledge.

But there have been relatively high-technology periods in human history that are now entirely forgotten. For instance, who produced the Antikythera Mechanism[1], and how? This highly sophisticated, precision-machined mechanical computing device, built around 150 BC, simply does not fit our understanding of history at all. Then there are the Baghdad Batteries: who used them, and for what?[2] Another loss, within only the last thousand years, was the method of creating Damascus Steel.[3]

All knowledge of such devices, and probably of other past technologies, has been lost, leaving only mysterious ancient relics, sometimes with no social context whatsoever. How can we be sure that in another thousand years people won't find a calcified smartphone and be mystified, wondering who produced it, how, and what it was for?

In modern times, appalling loss of technological knowledge continues. These days we don't (usually) burn libraries; they merely get progressively purged of irreplaceable old technical books, into dumpsters in the rear lane. The entire theoretical works of the genius Nikola Tesla[4] in the latter stages of his life are lost (or at least vanished from public view), Philo Farnsworth's work in the 1960s on nuclear Fusors[5] is mostly gone, and the majority of all books published through the middle years of the 20th Century are well on their inevitable path to illegible dust due to acid-paper disintegration[6].

More recently it's become fashionable to dispose of all technical data books and manuals from the early days of the electronics revolution, because "who needs that bulky old stuff? It's obsolete, and all we need is online now."

As a species, we don't seem to have much common sense, caution, or ability to learn lessons from history. It's not impossible to imagine circumstances where the 'old' technical knowledge might become critically useful again. Archaeologists will tell you that it's the rule, not the exception, for civilizations to eventually collapse; also that social complexity is a primary causative factor in such collapses, and that the process is always unexpected and far from pleasant.[7] Unpredictable external events can also bring abrupt global disruption, for instance the meteorite that made the 12,000 year old, 30 kilometer wide Hiawatha impact crater in Greenland.[8]

Allowing our present knowledge to fade away would be very unwise.

As someone with an interest in several aspects of history, particularly the history of electronics, I often find myself seeking old books and manuals. Before the Internet, and with limited personal means, this was usually a futile exercise. But with resources like eBay and AbeBooks, web search, the many online archives of digitized works, and forums of others with similar interests, the situation has greatly changed.

These days one typically has a choice between obtaining an original paper copy of an old manual, or downloading (often for free) an electronic copy. Personally I find the physical copies vastly more readable, practical, convenient and satisfying than electronic copies, so I generally buy physical copies when available and affordable. But of course this won't be possible forever, as these things age and become rarer. It may take a few centuries before there are no more paper copies, but ultimately the only available forms will be digital, or reproductions from the digital copies. Which means there had better be digital copies, or the knowledge will be lost like Damascus Steel, or the skills that made the Antikythera Mechanism.

Fortunately there are many who recognise the problem we have with technological data loss, and make efforts to rectify the situation. They scan old technical documents, and upload them to the many online archives.[9]

Some of these are private individuals, sometimes forming groups to work together preserving such history. Others are for-profit operations, demanding payment for access to their digital copies. There are a few good examples of corporations taking the trouble to preserve public archives of their own past technologies, but mostly businesses do not care about such things since the effort does not mesh with the corporate first commandment: to maximize profit above all else. Or they exhibit undesirable behaviors such as 'for legal reasons' mass culling all circuit schematics from their archive of digitized manuals, or deliberately ensuring the digitizing resolution is too poor to be usable. (Their rationale is a lie, it's actually about ensuring old equipment can't be maintained, thus slightly increasing sales of new equipment.)

As for governments, they seem to be too busy archiving and crimethink-analyzing our emails and cross-referencing a billion Twitter messages to give any thought to the preservation of humankind's technical and literary cultural heritage. The deliberate destruction of the engineering plans for the Saturn V moon rocket is just one of many examples that illustrate typical government thinking.


Judging by the large numbers of quite poor quality document scans online, there does seem to be a need for a 'how to' like this. This work is the result of my own learning experiences, involving plenty of mistakes. Hopefully it will help you avoid repeating them. Critiques and suggestions for improvement of this work are welcome.

Perfectly good LCD monitors are also free, since many people chase the 'bigger is better' mirage and discard their old one after only a couple of years. The screen should be at least 1280 x 1024 and have good colour accuracy, but most importantly the screen should be clean! Put a pure white background up, or maximize a text editor, and check there are no blemishes, specks or dud pixels on the screen. A speck on the screen and a speck in the document look the same until you pan the document image. You are going to be looking at a lot of spaces between text while removing scanning imperfections, and having a spotless screen will save you a lot of trouble. Other reasons this is important for your final document files will become evident later.

You can clean screens with typical window spray cleaners, keeping the spray very light and not letting any run under the edge molding. (If it does, the fluid can corrode the metal frame of the LCD and, at worst, damage the electronics.) Laying the screen flat while cleaning can help avoid that. Wipe dry with a soft tissue while watching a slanting reflection in the surface, then check against the white background again. Never rub anything across the screen that could leave scratches: the surface is a relatively tough plastic film, but it is not glass and not as hard as glass.

If you can't produce raw capture images preserving every significant detail of the physical document (including blank paper areas being blank in the captured image, and photos retaining all original detail), then the capture device is not good enough. You may decide that some levels of detail can be dropped from the final online document copy, but that must be your aesthetic decision, not the result of hardware shortcomings. If you're being forced to accept quality loss due to equipment limitations, then it's better not to waste your time doing the scanning work at all. Not until you get better equipment.

Generally though, quality is not a problem even with low-cost scanners today. The scanner does not have to be anything great, so long as it produces reasonable quality images, and most do. If it can achieve 600 dpi (dots per inch), has color and gray-scale capability, can capture a reasonably linear grayscale, and its software lets you adjust the capture response to suit the tonal qualities of the actual document, it will do the job for most books and technical documents. Note that this completely excludes all 'FAX mode' black and white scanners.

The maximum page size you'll need it to handle depends loosely on the kinds of documents you expect to scan. If you will only need to handle a limited number of sheets bigger than the scanner bed, then stitching sections together in post-processing won't kill you, so a small (cheap) scanner can be adequate. If you want to attempt manuals with hundreds of large fold-out schematics, then you're going to need something more up-market.

Ergonomic issues, such as whether there is auto sheet feeding, how many keystrokes or mouse clicks are required per page scanned, and scanning carriage speed at the chosen resolution, determine how much time will be required, not the quality of the end result. Whether time is a primary issue for you depends on your situation. For some it's not particularly important, for others it's crucial. That choice is yours. With bound volumes you're going to be manually handling each page scan anyway, so autofeed is irrelevant. Also the page setup takes long enough that a few more keystrokes aren't going to make much difference either.

Another factor is that page autofeed mechanisms bring a small but real risk of misfeeds that may damage the original document, with consequences dependent on the rarity and value of the document. Weighing this risk is your responsibility.

I currently use an old USB Canon LiDE 20 that I literally found among some tossed-out junk. I downloaded the drivers from the net, and it worked fine. It has an A4-sized bed, so with foldout schematics I have to scan them in sections and stitch. The stitching is typically only a small portion of the post-processing work required. Most of that post-processing would still be required no matter how expensive a scanner I used.
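Before tackling a big foldout it can help to know how many overlapping sections it will take. The sketch below is a minimal helper, assuming a bed of 216 x 297 mm (an assumed A4-class figure; check your own scanner's specification) and a 20 mm overlap between neighbouring sections to give the stitching some common detail. The function name and both defaults are hypothetical choices for illustration.

```python
import math


def sections_needed(sheet_w_mm, sheet_h_mm, bed_w_mm=216, bed_h_mm=297, overlap_mm=20):
    """Number of overlapping scans needed to cover a sheet larger than the bed.

    Along one axis, n scans cover bed + (n - 1) * (bed - overlap), so a length
    L needs ceil((L - overlap) / (bed - overlap)) scans. Both orientations of
    the sheet on the bed are tried, and the smaller total is returned.
    """
    def per_axis(length, bed):
        if length <= bed:
            return 1
        return math.ceil((length - overlap_mm) / (bed - overlap_mm))

    upright = per_axis(sheet_w_mm, bed_w_mm) * per_axis(sheet_h_mm, bed_h_mm)
    turned = per_axis(sheet_w_mm, bed_h_mm) * per_axis(sheet_h_mm, bed_w_mm)
    return min(upright, turned)


print(sections_needed(297, 420))  # an A3 foldout
print(sections_needed(600, 400))  # a larger schematic sheet
```

In practice you may want a larger overlap, since image quality near the bezel edge of some scanners is poorer than in the middle of the glass.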

If you have a high-end digital camera, for some document types it can be used instead of a scanner. The criterion is whether or not the images must be exactly linear and consistently, accurately scaled across the page. For some things (for instance engineering drawings, and anything you are going to try stitching together) exact rectilinearity is indispensable, while at other times (e.g. text pages) it generally doesn't matter. With cameras you'll get some perspective scaling variations and barrel distortion no matter what, while scanners produce a reasonably accurate rectangular scaling grid across the image.

You'd need a setup with a camera stand, diffuse side lighting and black shrouding, so a sheet of glass can be placed on the surface to hold it flat without introducing reflections. I've so far only tried this with a large schematic foldout, but found my camera resolution was inadequate for that detail level. I'll be trying it again for the chore of scanning one particular large old book. It should solve the 'thick spine can't spread flat' problem, which is an intrinsic obstacle with cheap flatbed scanners. There are scanners where the imaging bed extends right to one edge, but they are expensive. Google 'book edge scanner'.

Another thing I've yet to try is a vacuum hold-down system, for forcing documents with crinkles and folds to lie very flat. This should be workable for both scanners and camera setups.

The reason the scanner is not the most critical element in the process is that, no matter how good the scan quality, you are still going to have to post-process the images. During post-processing many imperfections in the images can be corrected; it takes a very bad scanner to produce images so poor they can't be used. A large reduction in the final file sizes can also be achieved by optimizing the images to remove 'noise' in the data that contributes nothing to the visual quality or historical accuracy.
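To see why removing noise shrinks files, here is a small experiment using Python's zlib module, whose deflate compression is the same method PNG uses (PNG applies per-row filtering first). The synthetic 'blank paper' values and the 230 threshold are assumptions for illustration only. Applied to a whole real page, such a threshold would also clip light tones in photos, so in practice you would choose it by experiment and restrict it to areas you've decided are noise.

```python
import random
import zlib


def clean_background(pixels, white_threshold=230):
    """Set every 8-bit gray value at or above white_threshold to pure white.

    Blank paper never scans as exactly 255; its small random variations are
    noise that a lossless compressor must faithfully store.
    """
    return bytes(255 if p >= white_threshold else p for p in pixels)


random.seed(1)  # repeatable synthetic data
# 100,000 pixels of 'blank paper' jittering between 235 and 250.
noisy = bytes(random.randint(235, 250) for _ in range(100_000))
cleaned = clean_background(noisy)

noisy_size = len(zlib.compress(noisy, 9))
cleaned_size = len(zlib.compress(cleaned, 9))
print(noisy_size, cleaned_size)  # the cleaned data compresses far smaller
```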

Of course, where the line lies between unwanted noise, and document blemishes of significance to its character and history, is up to you.

Overall, your post-processing skill and the document encapsulation stage will have the most significant effects on quality, utility and compactness of the final digital document.

Fundamentally the results depend on your skills, not the tools. That shouldn't be any surprise.

The choice of scanner vs camera is complex, and depends on the needs of your anticipated scanning work, and subtle issues of image quality — that will be discussed in depth later. I'm not going to recommend one or the other. The following table lists general pros and cons of each; some are simple facts, others are matters of opinion.

Comparison of Flatbed Scanners to Cameras

| | Scanner | Camera |
|---|---|---|
| Resolution | Specified per inch/cm of the scanner face. Typically scanning is done at 300 to 600 dots per inch (dpi), but most scanners can go up to over a thousand dpi. At say 1200 dpi, an A4 sheet (8.27" x 11.69") has a scan image size of about 9,921 x 14,031 pixels. That's about 139 megapixels. And while scanning it's usual to overscan, with an extra border around the page, so the total resolution will be higher. | Specified as X by Y pixels for the overall image. This has to include any border allowed around the page, so the page resolution will be a bit lower. Taking the Canon EOS range as typical, image resolutions range from 10 to 50 megapixels. For an EOS 5DS with 51 megapixels, max resolution is 8688 x 5792 (aspect ratio 1.5:1). For an A4 page (aspect ratio 1.41:1) that gives about 700 dpi max, and with some border it will be a bit less. This is just enough to adequately capture printed images with tonal screening. |
| File size | Can be configured for low file size, but generally the files are large; 50 MB is common for a page. Scanner files are usually intended only for post-processing work, with the final product being noise-reduced, scaled to a much lower resolution, then compressed in a non-lossy format, and probably bundled into a single document wrapper file. | Cameras are designed to produce manageable file sizes as a primary requirement, so compression is enabled by default. Unless the user selects an uncompressed format, files are generally a few MB or less. Even with uncompressed formats, because of the lower overall image resolution, file sizes will be smaller than typical scanner images. Camera image files are usually expected to be archived as-is, and distributed as-is or at reduced resolution. For document capture they will undergo similar post-processing and bundling to scanner images. |
| Image coding format | Scanner utilities generally offer a wide range of image codings, both lossy and non-lossy, with JPG and PNG typically available. For document capture non-lossy PNG is highly preferred. Never use a lossy format (JPG) for original scan files. | Cameras tend to have fewer file type options, and some may lack any non-lossy format. JPG is always present, and some cameras offer non-lossy forms such as RAW. I've never seen a camera with PNG as an option. |
| Scale linearity | Intrinsically linear across the entire scan. At least it should be: some poor quality scanners, or mechanical faults, can introduce non-linearity. | Varies across the document due to perspective and sphericity. Can be minimized via camera alignment, focal distance and lens selection. |
| Stitchability | Manual PS image stitching is possible due to consistent scale, linearity and illumination. | Perspective and illumination variations require software tools to stitch images. Without custom utilities it's generally impossible to do adequately. Even with the best tools, final product linearity won't be quite true to the original. |
| Illumination | Intrinsically highly uniform. | Achieving uniformity requires a careful lighting setup, with significant extra cost. |
| Repeatability | Very high, due to the absence of external lighting variations, and to saved configuration files. | Difficult to achieve without a complex physical setup and care with camera settings. |
| Precise quantization curve control | Yes, always, via config screen. Easily accessible, since this is a primary requirement of scanners. | Depends on the camera. Typically not, or if present it will require digging down through setup menus, since this is not a feature commonly required for general camera use. |
| Config files | Yes, always. | Depends on the camera. Typically not. |
| Intrinsic defects | Linear streaks in the direction of carriage travel, due to poor calibration or dirt on the sensor. Smudges, dirt, hair and scratches on the glass repeat in each image. Focus and luminance defects wherever the paper was not flat against the glass. | Perspective and sphericity linearity defects. Luminance variation across the page. |
| Reflections | Never. | When a glass sheet is used to flatten the document, suppressing reflections of the nearby environment requires active measures such as dark drapes, lighting frames, darkroom, etc. |
| Page back printing bleed-through | Can be a noticeable problem due to high intrinsic capture sensitivity. Requires measures such as black felt backing to suppress. | Tends not to be noticeable, partly due to lower overall capture sensitivity. |
| Print screening moire pattern control | Since scanner resolution can be set close to the screening pitch, moire patterns have to be deliberately avoided by selecting an adequately higher resolution. | Not generally a problem, since camera image resolution when capturing full pages with printed screening is typically too low to generate moire effects. However this means the screening pattern is lost, and cannot be precisely dealt with in post-processing. |
| Page setup effort | Depends on the document. Stiff-spined books can be difficult or impossible. Single sheets are easy. Sheets larger, or much smaller, than the scanner face can be a pain to align. Some scanners can auto-feed pages. | Depends on the setup. The primary attraction of camera-based systems is the feasibility of capturing thick-spined books, using frames to hold the book partially open, and/or capturing two pages at once with minimal page turning effort and little manipulation of the whole book. |
| Capture speed | Not instant. Speed depends on the scanner and selected resolution. It can be quite slow: up to several minutes per scan for large pages at high resolution. | Instant. But there's still the issue of transferring images to the computer. This can be rapid too if the camera is directly connected by wire or wifi. |
| Document flatness | Must be flat against the glass. With some documents this can be difficult to achieve. Folds, book spine bends, and edge lift due to sheets larger than the scanner face bezel aperture are common problems. | Not critical, due to the camera being distant from the document surface. Usually document flatness irregularities are too small to be significant within the focal depth of the camera. |
| Space requirements | With scanners now being so small, setup space requirements are minimal. A small scanner can be easily stored away when not in use. Larger ones can require dedicated floorspace. | The setup required to achieve good results with cameras can be quite bulky, and tends to require permanent allocation of space or even a room. |
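The resolution arithmetic for scanners and cameras can be checked with a few lines of Python. This sketch uses the ISO 216 A4 size of 210 x 297 mm and assumes the page exactly fills the camera frame with no border, so real camera figures will be somewhat lower. The function names are illustrative only.

```python
MM_PER_INCH = 25.4
A4_MM = (210, 297)  # ISO 216 A4 sheet, width x height in mm


def scan_pixels(width_mm, height_mm, dpi):
    """Pixel dimensions of a flatbed scan of a sheet at the given dpi."""
    return (round(width_mm / MM_PER_INCH * dpi),
            round(height_mm / MM_PER_INCH * dpi))


def camera_page_dpi(sensor_px, page_mm):
    """Best effective dpi when a whole page fills a camera frame.

    The page's long side is matched to the sensor's long side; whichever axis
    gives the lower dpi limits the usable resolution. No border is allowed for.
    """
    long_px, short_px = max(sensor_px), min(sensor_px)
    long_in = max(page_mm) / MM_PER_INCH
    short_in = min(page_mm) / MM_PER_INCH
    return min(long_px / long_in, short_px / short_in)


w, h = scan_pixels(*A4_MM, dpi=1200)
print(w, h, round(w * h / 1e6))                     # pixels, then megapixels
print(round(camera_page_dpi((8688, 5792), A4_MM)))  # 8688 x 5792 sensor, A4 page
```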

It's necessary to use the hardware drivers, but in my experience most of the extra bundled software tends to be rubbish and is best ignored. If you want to save the considerable time it takes to verify that they're rubbish, don't even install them. Photo album 'organizers' will try to hide the underlying filesystem (it's a Microsoft/Windows corporate paradigm that users can't manage or organize their own files, so the OS and Apps should 'relieve them of that responsibility'). OCR-ing the document would be nice, if there was a file format for containing both the original imagery and the OCR text in an integrated manner. But there isn't. As for PDF converters, well, I'll discuss PDF later.

Most importantly though, bundled Apps tend to be cheap or crippleware versions, often wanting you to pay to upgrade to full versions that are still not as capable as better, easily available utilities, and in any case requiring a fair amount of learning-curve effort to use at all. Then if you change your scanner you may find those Apps don't work any more, and you have to learn the ones bundled with the new scanner.

It's far better to find a set of good independent (preferably Freeware and Portable) utilities that can do the things you need, learn how to use them well, and stick with them.

Regarding the scanner driver software that you will need to use, here's a list of a few essential features:

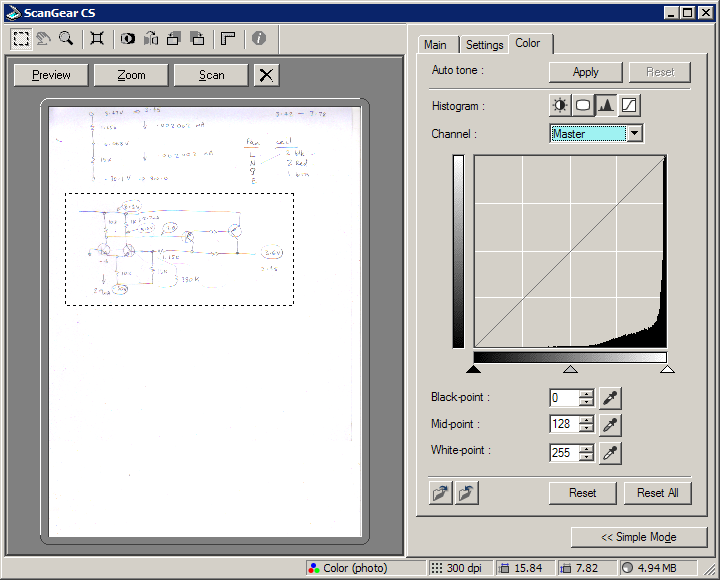

At left there's a lo-res scan preview, with dashed outline for the area to scan at selected resolution. The dashed outline can be moved and resized with the mouse. At right the response curve can be adjusted via mouse drags or numeric entry. The graph shows statistical weights of luminance values in the scanned image. The preview image changes on screen interactively with adjustment of the curve.

Note that this example shows the tool as it initially appears, unadjusted. The fine straight diagonal line and the numbers describing its shape and position are not how they would be after user adjustment for that preview image. We'll get to that later. For now the important point is that once adjusted, those values should normally remain the same for all pages of a document being scanned. If these values change between pages, the appearance of the scanned pages will be inconsistent.

That's why facilities to save and restore the scanner profile are here — because this is the most important element of the scanner settings. More important even than resolution. Settings for colour mode and resolution are in other tabs, but they are saved together with these values.

Here the save/load action is represented by the small folder icons; it will be different in every scanner's software. But it's crucial that the ability exists, and that profiles can be saved with user-specified names, in folder locations specified by the user. You must be able to easily save the profiles in specific folder locations because these files must remain associated with each scanning project.
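To make the idea of a saved response curve concrete, here is a sketch in Python. Scanner drivers store profiles in their own formats, and curve tools differ between drivers, so the black point / white point / gamma parameterization, the `scan_profiles` folder, and the JSON keys below are all hypothetical. The point it illustrates is that one set of curve values becomes one fixed lookup table applied identically to every page, and that the values live with the project.

```python
import json
import tempfile
from pathlib import Path


def tone_curve(black_in=0, white_in=255, gamma=1.0):
    """256-entry lookup table: input levels <= black_in map to 0, levels
    >= white_in map to 255, and levels in between are stretched linearly,
    then shaped by gamma (gamma > 1 lightens mid-tones, < 1 darkens them)."""
    if not (0 <= black_in < white_in <= 255 and gamma > 0):
        raise ValueError("need 0 <= black_in < white_in <= 255 and gamma > 0")
    lut = []
    for v in range(256):
        t = min(max((v - black_in) / (white_in - black_in), 0.0), 1.0)
        lut.append(round(255 * t ** (1.0 / gamma)))
    return lut


def apply_curve(pixels, lut):
    """Apply a lookup table to 8-bit gray-scale pixel data."""
    return bytes(lut[p] for p in pixels)


def save_profile(project_dir, name, settings):
    """Store settings as <project_dir>/scan_profiles/<name>.json."""
    folder = Path(project_dir) / "scan_profiles"
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / f"{name}.json"
    path.write_text(json.dumps(settings, indent=2, sort_keys=True))
    return path


def load_profile(project_dir, name):
    """Read back a profile written by save_profile()."""
    path = Path(project_dir) / "scan_profiles" / f"{name}.json"
    return json.loads(path.read_text())


# Example values only; real ones come from adjusting against a preview scan.
profile = {"mode": "grayscale", "dpi": 600, "black_in": 18, "white_in": 232, "gamma": 1.0}
with tempfile.TemporaryDirectory() as project:
    save_profile(project, "text_pages", profile)
    restored = load_profile(project, "text_pages")

lut = tone_curve(restored["black_in"], restored["white_in"], restored["gamma"])
print(list(apply_curve(bytes([10, 18, 125, 232, 250]), lut)))
```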

That's pretty much all that's needed from the scanning software. You'll see many extras like noise reduction, despeckle, and so on, but they aren't essential, and you'll have to verify experimentally whether they are useful or a hindrance in practice. Whatever 'automations' the scanner and its software offer to do for you will mostly limit your range of choices regarding the final product of your efforts. Once you are familiar with the nuances of post-processing you'll generally leave all the scanner frills turned off. That will remain true at least until we have software AI with aesthetic capabilities at human level, and that probably won't end well for anyone.

Yes, I prefer a simple legacy Windows style, as I find flashy visual bling adds nothing but distraction, and can detract from accurate assessment of the image on which I'm working.

See also Useful software utilities.

MS Windows is particularly prone to this syndrome, with the Microsoft-recommended program structure involving things like the Windows Registry, splitting of software components across different locations like the System folders, Programs folder tree, user-specific folders, and so on.

However Apple and Linux are also guilty of poor choices in software install structures.

The result of such fragmentation into parts entangled throughout the operating system is utilities that cannot simply be cloned as a unit (complete with all user configurations) to another machine. When one has very many software tools, each of which took time to learn and may not get used very often, this non-clonability becomes a serious problem when transferring from one machine to another, or keeping multiple machines with identical tool setups. It is simply not feasible to repeat the install process for long lists of utilities every time one moves to new computing hardware.

In recent years there has been a developing movement to overcome this revolting, stupid problem, by creating 'portable utilities'. The idea is very simple — the entire concept of 'installing' is discarded, and software is restructured to reside and operate entirely within a single folder. All executables, defaults and user configuration files are kept in that one place, without any external dependencies.

Which is how it should be, and always should have been. It's a fair question to ask why it wasn't. The answer to that one comes down to the usual 'do we assume it was just incompetence? Or was it intentional, for reasons to do with insidious corporate intentions deriving more from ideology than profit motive?' It's your choice what you believe.

A highly desirable consequence of choosing 'portable utilities' is that you can create a folder tree containing all those you use. Also one folder (in that tree) of shortcuts to all the utilities. Then the entire folder tree can be copied to other machines as-is, resulting in all your customary tools being instantly available on the other machine(s). Also already configured just the way you like, since all the config information was present in the tree too.

When selecting software to add to your toolset, it's advisable to first check to see if there is a portable version available. And if not, hassle the authors to make their software portable.

One place to start: http://portableapps.com/

If you find yourself worrying about disk space used for hundreds of very large image files, then just buy an external HD and dedicate that to scan images. It will simplify your backup options anyway.

And for heaven's sake don't even think of using patch compression encodings such as JBIG2, whose symbol-matching mode can silently substitute one similar-looking character for another.

The original scans should capture even the finest detail on the pages. This includes fully resolving the fine dots of offset screened images. Anything less and you'll never get rid of the resulting moire patterns and other sampling alias effects, no matter what you try in post-processing.
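A rough lower bound on scan resolution for screened images follows from the sampling theorem: to avoid aliasing, the screen's base frequency must be sampled at least twice per period. The sketch below assumes you know or can estimate the screen ruling in lines per inch (lpi), for instance with a screen gauge or loupe, and rounds up to a multiple of 100 dpi (an arbitrary assumption; use the settings your scanner actually offers). Treat the 2x result as a floor, not a target, since resolving the dot shapes themselves needs more.

```python
import math


def min_scan_dpi(screen_lpi, samples_per_period=2.0, step=100):
    """Smallest scan resolution, rounded up to a multiple of step dpi, that
    samples a halftone screen of the given ruling at least samples_per_period
    times per screen period.

    samples_per_period=2 is the Nyquist limit for the screen's base
    frequency; below it the screen pattern aliases into moire. Actually
    resolving the dot shapes needs a higher value.
    """
    return math.ceil(screen_lpi * samples_per_period / step) * step


for lpi in (85, 133, 150):
    print(lpi, min_scan_dpi(lpi), min_scan_dpi(lpi, samples_per_period=4))
```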

A common conceptual error is the assumption that if a page is black ink on white paper, the scan only needs to be bi-level (1-bit), i.e. each saved pixel need only encode full black or full white. The result is actually extremely lossy, since all detail of edge curves finer than the pixel matrix is lost. In the worst case there will also be massive levels of noise introduced by scanner software attempting to portray fine shading as dithered patterns of black pixel dots. In reality, so-called 'black and white' pages require accurate gray-scale scanning at minimum, and perhaps even full colour scanning if there are any historic artefacts on the page worth preserving, for example watermarks and handwritten notes.
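The loss of edge detail in 1-bit scans is easy to demonstrate in one dimension. This sketch models an idealized gray-scale scanner as recording, for each pixel, the fraction covered by ink (an assumption; real sensors also blur slightly). Two ink edges at different sub-pixel positions give different gray values, but identical 1-bit rows, so the 1-bit version has thrown that information away for good.

```python
def edge_row(edge_pos, width=8):
    """Gray levels (0 = black ink, 255 = white paper) of one pixel row that
    crosses a vertical ink edge at fractional position edge_pos.

    Pixels left of the edge are fully inked; the pixel containing the edge
    records the area-weighted average of ink and paper.
    """
    row = []
    for x in range(width):
        ink = min(max(edge_pos - x, 0.0), 1.0)  # fraction of pixel x covered by ink
        row.append(round(255 * (1 - ink)))
    return row


def to_bilevel(row, threshold=128):
    """1-bit version: each pixel becomes pure black (0) or pure white (255)."""
    return [0 if v < threshold else 255 for v in row]


for pos in (3.2, 3.4):
    print(pos, edge_row(pos), to_bilevel(edge_row(pos)))
```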

Yes, the raw scan file sizes will be pretty big; easily 10 to 50 MB per page. Tough. Just remember that the final document file size you achieve will have little relation to these large intermediate file sizes, but the quality will be vastly improved by not discarding resolution until near the end of the process. At that stage any compromise (file size vs document accuracy) can be the result of careful experiments and deliberate aesthetic choice.
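For planning disk space, the uncompressed size of a page image is simply pixel count times bytes per pixel. The sketch below assumes an A4 page (210 x 297 mm) and 8 bits per channel. A lossless format such as PNG will usually store less than this, by an amount that depends entirely on the page content and its noise.

```python
def raw_page_mb(width_mm, height_mm, dpi, channels=1, bits_per_channel=8):
    """Uncompressed size in megabytes (10**6 bytes) of one scanned page.

    channels is 1 for gray-scale and 3 for RGB colour.
    """
    px_w = round(width_mm / 25.4 * dpi)
    px_h = round(height_mm / 25.4 * dpi)
    return px_w * px_h * channels * bits_per_channel / 8 / 1e6


for dpi in (300, 600):
    for label, channels in (("gray", 1), ("RGB", 3)):
        print(f"A4 {dpi} dpi {label}: {raw_page_mb(210, 297, dpi, channels):.1f} MB")
```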

All intermediate processing and saves subsequent to scanning must also be in a non-lossy format. Keep the working images in PNG or the native lossless format of your image editing utility. Under no circumstances should you do multiple load-edit-save cycles on one image using the JPG file format, since every time you save an image as JPG more image detail is lost.

Only the final output may be in a lossy format, and preferably not even then.

Ideally, the only image resolution reduction ever should occur when you deliberately scale the images down to the lower resolution and encoding which you've chosen for the final document.
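As an illustration of one deliberate, final downscaling step, here is a minimal area-averaging reduction by an integer factor, written in pure Python over a gray-scale image held as a list of rows. Area averaging is only one resampling method, chosen here for simplicity; image editors offer others (bicubic, Lanczos, and so on) that you may prefer after comparing results on your own pages.

```python
def downscale(image, factor):
    """Reduce a gray-scale image (list of rows of 0-255 ints) by an integer
    factor, averaging each factor x factor block into one output pixel.

    Rows and columns left over when the size isn't a multiple of factor are
    dropped; crop or pad beforehand if that matters.
    """
    h, w = len(image), len(image[0])
    out = []
    for by in range(0, h - h % factor, factor):
        row = []
        for bx in range(0, w - w % factor, factor):
            block = [image[y][x]
                     for y in range(by, by + factor)
                     for x in range(bx, bx + factor)]
            row.append(round(sum(block) / len(block)))
        out.append(row)
    return out


small = downscale([[0, 0, 255, 255],
                   [0, 0, 255, 255],
                   [0, 255, 255, 255],
                   [255, 255, 255, 255]], 2)
print(small)
```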

It may be an obvious thing to say, but do clean the scanner glass carefully before beginning, and regularly through the process. Smudges, spots, hairs, even small specks of dust, all mar the images and can make a lot of extra work for you in post-processing.

For documents with a large number of pages it's helpful to work out a page setup procedure that is as simple as possible. If you're working with loose sheets, have the in and out stacks oriented to minimize page turns, rotates, etc. The out stack should end up in the correct sort order, not backwards. Avoid having to lean over the scanner face, or you will find hairs in the scans. Ha ha... at least I do. Your body hair shedding rate may vary.

It's helpful to have some aids for achieving uniform alignment and avoiding skew of the pages. But don't stress about this, as you'll find that page geometries will vary enough that you'll probably want to unify the formats during post processing anyway. Also surprisingly often the printing will be slightly skew on the paper, so your efforts to get the paper straight were kind of wasted, and de-skewing in post processing is necessary after all.
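When de-skewing in post-processing, one simple way to get the correction angle is to read the pixel coordinates of two points on the same text baseline or ruled line, then compute the line's angle. This is a minimal sketch assuming image coordinates with y increasing downward. The sign convention for rotation differs between image editors, so check the direction on a test page before applying it to a whole batch.

```python
import math


def skew_angle_deg(p1, p2):
    """Angle in degrees of the line from p1 to p2, where both are (x, y)
    pixel coordinates on the same baseline, with y increasing downward.

    A positive result means the line descends to the right; rotating the
    image by the opposite angle levels it.
    """
    (x1, y1), (x2, y2) = p1, p2
    return math.degrees(math.atan2(y2 - y1, x2 - x1))


# A baseline that drops 35 pixels over 4000 pixels of width.
print(round(skew_angle_deg((400, 1200), (4400, 1235)), 3))
```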

Often you will find the document has physical characteristics that make pages resist achieving flatness against the scanner glass. Any areas of the paper that are slightly away from the glass will be out of focus in the scanned image, or cause tonal changes across the page. Folds in the paper, areas at the edge of the scanner glass adjacent to the bezel, and so on, tend to degrade glass contact.

Sometimes there's simply nothing that can be done about it, for instance with thick bound books. In other cases (e.g. folds or crinkling of the paper) the answer is more force, in the right places. The flimsy plastic lid and foam-backed sheet of typical scanners tend to be too weak to apply real force to something that needs 'persuading' to go flat. For my setup I have an assortment of metal weights (up to one that is hard to lift one-handed), cardboard rectangles of various sizes and thicknesses to use as packing shims where needed, sheets of more resilient foam, and some pieces of rigid board to take the place of the scanner lid when it isn't enough.

Ultimately the limit of how much force you can apply is determined by the scanner body and glass. The optical sensor carriage will be very close to the glass, and warping the glass significantly will jam the carriage, or cause focus problems. Obviously dropping a big weight on the glass is to be avoided too. If you are repeating the same motions over and over, perhaps late at night, that's a real risk. (No, I haven't had this accident. Yet.)

If you are working with a scanner with auto-feed, lucky you, as long as it actually manages to feed and align all the pages correctly. If your system claims to automate the entire scanning and document encapsulation (to PDF) process, then I suggest you take a close look at the quality of the output, for all pages. If you consider it OK, then you don't need this 'how to'.

%%% modes. B&W, grayscale, color, Image resolution: XY (pixels) and shading resolution: Indexed colour table size, indexed grayscale, and RGB colour. turn off all 'optimizations' - sharpen, moire reduction, dither, etc. capture area - keep it consistent, so images of the same kind are all the same size. OCR profile curves, corrections, etc. Saving them. Ref to workflow... DPI, image size in pixels, and the concept of 'physical size of digital image' For non-lossy formats (PNG, TIFF, etc) Ref to file format characteristics section. EXIF information in camera, scanner and photo-editor util images. How to remove it and why you should.

Here's a more realistic and detailed procedure:

In the project root, create a text file to keep recipe and progress notes. You may find yourself looking at your notes again many years later, so it's a good idea to start the text with a heading stating exactly what you were doing here, and when. Remember, you won't remember!

Don't rely on file date attributes - these can get altered during copy operations. Put the date in the file. Personally I put it in many file and folder names as well, like YYYYMMDD_description. That way they sort sequentially.
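A minimal Python sketch of generating such a date-prefixed name (the descriptions are just examples). Because `%Y%m%d` always gives eight fixed-width, zero-padded digits, plain alphabetical order of the names is also chronological order.

```python
from datetime import date
from typing import Optional


def dated_name(description: str, when: Optional[date] = None) -> str:
    """Return a name like '20140603_hp_manual_scan' for a file or folder."""
    when = when or date.today()
    # Collapse whitespace to underscores so the name is shell-friendly.
    slug = "_".join(description.lower().split())
    # %Y%m%d is zero-padded and fixed-width, so names sort chronologically.
    return f"{when.strftime('%Y%m%d')}_{slug}"


names = [dated_name("recipe notes", date(2019, 1, 1)),
         dated_name("HP manual scan", date(2014, 6, 3))]
print(sorted(names))
```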

Give each page type a name. These become headings in the recipe file, where you keep notes on the settings that work (and those that don't).

If there are many pages of some simple kind like B&W text, but a few containing screened color images, it's worth scanning them at different resolutions and encodings, just for the time saved by scanning the text pages faster at lower resolution and in grayscale. But to confirm that the results can be manipulated to look the same in the final product, you'll need to do some trial scanning and processing experiments.

And yes, this means your Original_scans folder will probably have sub-folders; one for each identified page type that has different scan parameters. At some stage in post processing they'll merge to a common image format, but it may not be for several steps.

Also, never forget to view the overall page image, to check that scan quality is consistent across the entire area. Are there any spots where the paper wasn't flat against the glass, blurring or shading the detail?

How about bleed-through of items on the other side of pages? Sometimes this may only occur where there are particularly high contrast blocky elements on the other side, and you don't notice those few failures till much later. Look through the document for heavy black-white contrasts, and do trial scans of the other side of such pages to check for bleed-through.

If necessary, add 'black backing required' to your scan recipe. Note that if you must do it for some pages, you have to do it for all, otherwise the page tones will be inconsistent. The difference in total reflectance of pages with and without black backing will shift the tonal histogram of the images, which is exactly what you configured the scanner to optimise.

If a page type has many pages to scan, then also make sure you can see how to get them all positioned uniformly on the scanner.

Are you going to have to treat odd and even pages differently? For consistent framing it's best to do all of one kind, then the other, and keep the files separate until after you have normalized the text position within the page image area. In that case 'odd' and 'even' pages are actually different page types, and should be treated as such in your recipe.

Because this stage is preliminary, don't plan on using these scans as final copies to save the effort of redoing them in the main scan-marathon of all the pages. For one thing, you won't know what the actual sequence numbers of these pages will be until you are doing them all. It's very unlikely to be the literal page numbers! Also, when doing the scan-marathon it's more trouble than it's worth to be constantly checking whether each page is one you've done before, adjusting scanner sequence numbers, etc. So keep these first trial scans in 'experiments' and leave them there.

This is where you develop a sequence of quantified processing steps to achieve the desired quality in the final document. You must have all of that completely worked out before you begin the grunt work, because if part way through the job you decide you don't like something and change the process, there will probably be a noticeable difference in the end result between pages already done and subsequent ones.

For documents with many pages, the 'scan them all' stage can require a very large investment of effort. You want to be completely sure you're not going to have to do it over again due to some flaw you didn't notice with the samples.

Make sure you are capturing all the parameters and can reproduce them. For instance, if you shut down your PC, go away for several days and forget what the settings were, can you resume where you left off and get an identical result? This is why you have a recipe notes file and saved scanner config profiles.

One decision you'll need to make at an early stage, based on your initial experiments, is a page resolution for the final product. This is important to know, because it also fixes the aspect ratio of the pages. Especially if you are doing some pages at different scan resolutions, you have to be certain you can crop and scale all pages to the same eventual size and aspect ratio, exactly. Don't just assume you can; actually try it with the utilities you will be using. Some tools don't let you specify crop sizes exactly in pixels while also positioning the crop frame on the page by eye, so it can be hard to crop a large image so that, when scaled down in a later step, it ends up exactly the right X,Y size to match pages from different workflows.
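One way to check this before committing is to compute the exact crop size, in scan pixels, that each workflow needs. A minimal Python sketch, assuming pages are scaled by the plain ratio of scan DPI to final DPI; the 1275x1650 target (US Letter at 150 dpi) is only an illustrative number. If the ratio doesn't come out in whole pixels, that workflow can't hit the target size exactly.

```python
from fractions import Fraction


def crop_size_for(final_w: int, final_h: int, scan_dpi: int, final_dpi: int) -> tuple:
    """Crop size in scan pixels that scales down to exactly final_w x final_h."""
    factor = Fraction(scan_dpi, final_dpi)  # exact rational scale factor, no rounding
    w, h = final_w * factor, final_h * factor
    if w.denominator != 1 or h.denominator != 1:
        raise ValueError(
            f"{final_w}x{final_h} @ {final_dpi} dpi has no whole-pixel crop at {scan_dpi} dpi")
    return int(w), int(h)


# Hypothetical project: text pages scanned at 300 dpi, photo pages at 600 dpi,
# both ending up as 1275x1650 final page images.
for dpi in (300, 600):
    print(dpi, crop_size_for(1275, 1650, dpi, 150))
```

Working in exact fractions rather than floats means a size that is 'almost' right raises an error instead of silently rounding to a page one pixel off.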

Once you are absolutely sure you're happy with the results, are sure they are repeatable, and have a detailed recipe that can either be automated or at least followed reliably by manual process, then it's safe to begin the real work. Just one last thing:

The following list is a typical sequence of steps, from scanning to finished document. It's a loose guideline only. Your own procedure may vary, depending on equipment capabilities, software tools and your objectives. Some of the stage orderings are just preferences, and you may encounter small details and special cases omitted from this walkthrough.

Summary in one line:

Scan, Backup, Mode, De-Skew, Align, Crop, Cleanup, De-Screen, Final Scale, Encode, Wrap, Online.

And Relax.

Once you have these original files, never alter them! Make them read only. If you stuff something up during processing, you can go back to these. Having these files means you can put the scanner and the original document safely away, and probably not need to get them out again.

If there are any file naming and sequencing issues you want to fix, you could do it now, provided you are 100% sure you won't goof and irretrievably mess things up, forcing a rescan. But why not do it more safely after...

Because it's an automated step with no adjustments, applied to all files, there's no point creating another processing stage folder. Just overwrite these files.

It can be difficult to use the manual rotate facility to get very fine adjustments. An alternative is to use the numeric entry rotate tool. After a little practice you can guess the required decimal rotation number to be right first time.
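To make that first guess less of a guess, you can read two points off a line that should be horizontal (a text baseline or a ruled line) using the editor's cursor position readout, and compute the angle. A minimal Python sketch; it assumes image coordinates with y increasing downwards, as most editors report them. Which rotation direction your tool treats as positive varies, so check it on a test image first.

```python
import math


def deskew_angle(x1: float, y1: float, x2: float, y2: float) -> float:
    """Angle in degrees of the line from (x1, y1) to (x2, y2) relative to horizontal.

    Coordinates are image pixels with y growing DOWN the page. A positive result
    means the right-hand end is lower (content tilted clockwise), so it needs
    rotating counter-clockwise by this amount; negative means the reverse.
    """
    return math.degrees(math.atan2(y2 - y1, x2 - x1))


# Two points picked on the same text baseline, 4000 px apart, with a 35 px drop.
angle = deskew_angle(100, 200, 4100, 235)
print(f"rotate {abs(angle):.2f} degrees {'counter-clockwise' if angle > 0 else 'clockwise'}")
```

Pick the two points as far apart as possible: a one-pixel error in reading y matters much less over a long baseline.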

Do not iteratively perform multiple sequential rotations to approach a correct result. Each rotation transform introduces a small amount of image loss. Instead, try an amount, judge the result, then undo (or reload) and try an adjusted value. The end result should be equivalent to a single cycle load, rotate-once, save.

Note: CS6's Edit ► Transform ► Skew provides a freeform trapezoid adjustment suited to photographic perspective correction. It's not suitable for correcting scanner skew errors.

CS6 also has File ► Automate ► Crop and Straighten Photos, but this only works for images with an identifiable border. A white page on a white scanner background doesn't work so well. Plus the printed text may be slightly skewed on the paper anyway.

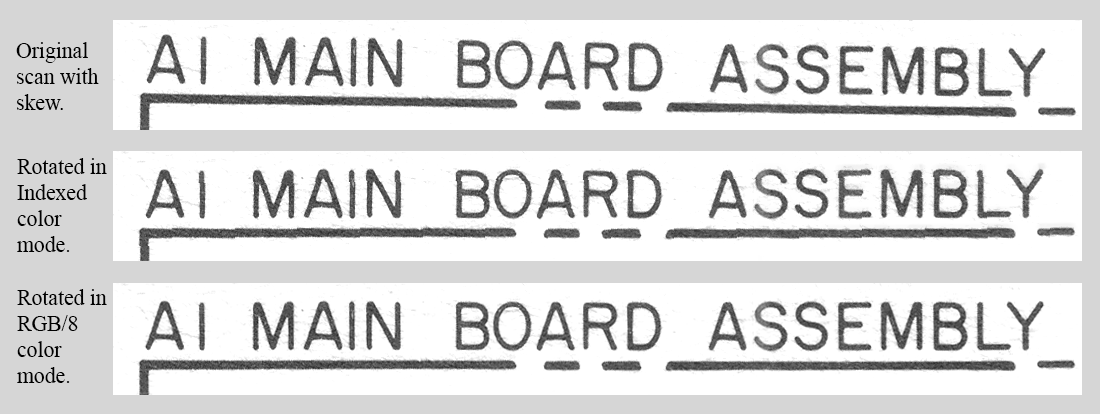

Incidentally, small-angle rotation is one of the operations that subtly but hopelessly screws up indexed colour files. Try it and see. Zoom in on what were originally straight lines; they become stepped after the rotation. The effect is due to the index table not containing the colour values needed for adequate edge shading. It's intrinsic, unavoidable, and unrecoverable.

If you're working on large pages that had to be scanned in parts, this is where you'll be rotating them as required and stitching them together in Photoshop. Leave other processing steps till later if there are many to do.

But on a screen, with potentially instant (blink) page switching, you certainly will notice any slight misalignment of page contents relative to each other. The effect can be quite annoying, and is usually considered a failure to reproduce the true nature of the original work. The authors didn't expect you to see 'jiggling pages.'

There's only one way to fix this. The pages all have to be 'content aligned' to the limit of human visual perception with blink page changes. That means very, very well aligned.

If readability and minimized file size are a higher priority than perfect reproduction of the original page layout (blank paper included), then at some point you'll be deciding on a suitable cropping of the page image files. You can crop quite close to the content. Margins on screen can be added programmatically, or just omitted.

Bear in mind that with lossless encodings like PNG, blank page areas (with perfectly uniform colour, eg full #ffffff white everywhere) compress to almost nothing. So the file size reduction from removing blank margins may not be as large as you'd expect. It's mostly about making the best use of screen pixels for human readability.

Obviously, to preserve the content position, this crop has to be identical for all pages.

There are various ways to do this. One is to load all pages into Photoshop as layers, crop the entire layer stack, then write the individual layers out as pages again.

Another way is via a batch mode operation in IrfanView, applying a fixed crop to all images.

Most image editing tools can automate this one way or another. But this is definitely a case where you want to be sure you have a backup of the prior stage files before committing.

All good image compression schemes (including non-lossy ones like PNG) are able to reduce truly featureless areas of an image to relatively few bytes. Background areas are usually the great majority of a page's area. Hence the nature of the page 'background content' very greatly influences final file size. If the background is actually a uniform colour, file size will be mostly taken up by the actual page content. If the background has texture, that is 'not blank' and requires a lot of bytes to encode, typically far more than the smaller area of actual page content consumes.
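A quick way to see the size of this effect, without any image tools, is to compress raw pixel bytes with DEFLATE, the compression algorithm used inside PNG, via Python's `zlib` module. This is a simplified sketch: real PNG encoders also apply per-row prediction filters before DEFLATE, so exact numbers differ, but the contrast between flat and textured background is the point. The 500x500 area and the 250 to 255 'texture' range are arbitrary assumptions.

```python
import random
import zlib

WIDTH, HEIGHT = 500, 500  # hypothetical 8-bit grayscale background area

# Perfectly flat paper: every pixel exactly 255.
flat = bytes([255]) * (WIDTH * HEIGHT)

# Paper that merely *looks* white: random values from 250 to 255 inclusive.
rng = random.Random(1)  # fixed seed so runs are repeatable
textured = bytes(rng.randint(250, 255) for _ in range(WIDTH * HEIGHT))

sizes = {}
for name, pixels in (("flat", flat), ("textured", textured)):
    sizes[name] = len(zlib.compress(pixels, 9))
    print(f"{name:>8}: {len(pixels):,} raw bytes -> {sizes[name]:,} compressed")
```

The textured version, though indistinguishable from white on screen, comes out hundreds of times larger than the flat one.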

The cleanup stage of post processing involves several quite different tasks that just happen to be carried out in the same way, and require similar kinds of aesthetic judgement. All of them require close, zoomed-in visual inspection of all areas of the image. This part of the process can take up a great deal of time and effort, or be quite brief if the scans were of good quality.

Ideally, during scanning you were able to adjust parameters to get all (or most) of the page background to be white (or some relatively clean colour) and most of the inked areas to be solid black (or whatever ink colours were used.) Remember, with ink-on-paper printing, there are no 'variable ink shades.' There's only ink or no ink. Tonal photos are achieved by 'screening' the ink(s) in regular arrays of variable sized fine dots. At this stage of post-processing those dot arrays should still be intact. We're still not dealing with those yet, other than perhaps to fix any obvious ink smudges.

In the worst case the scans may include a prominent paper texture of some kind, but usually there will be just a few blemishes, spots, speckles, hairs, missing ink, ink blots, etc. This is where you decide what to do about all of them. Were any of them intended by the document author and printer? If not, you should remove them if possible, with the additional incentive that their removal will reduce final file size. Your primary rule should be 'how did the author want this page to appear?'

Remember this? "For all you know, your electronic representation of a rare book or manual you are scanning today may in time become the sole surviving copy of that work. The only known record, for the rest of History."

You're going to be sitting there late at night, grinding through pages in photoshop, and will think "Screw this. I don't care about these specks or that dirt smudge. Who would ever care?"

Well, do you? What if this copy you are constructing ends up on a starship a million lightyears from Earth, the only copy in existence in the entire universe, after the planet and humankind are long gone. Was it important how the author wanted their work to appear?

%%%

Processing at this stage will greatly influence the compressibility of the image files, hence the final document size. Fundamentally it is a tradeoff between retained detail vs file size. Is a type of detail significant, or is it unwanted noise? All retained detail costs bytes. If it isn't significant, remove it. For instance simply removing blank paper texture (that may not even be visible to the eye unless the image saturation is turned way up) and replacing it with a perfectly flat white or tone, can massively reduce total final file size.

See %%%

So there's a need to keep detailed notes of the exact workflow in a 'recipe' text file. This should include both the scan parameters and the post-processing details: all tool settings, profiles, resolutions, scale factors, crop sizes, etc. If you saved profiles for the scanner, Photoshop or other tools, list the filenames and what each is for.

Be meticulous with keeping these recipe notes. It sometimes happens that much later you discover flaws in your final document, and need to redo some stages, possibly even re-scanning original page(s). If you don't have the full recipe, you may not be able to get the exact same page characteristics again.

Generally, once you have done sample scans and have a workable scan profile, scan the entire document in one go. Even if it takes days, don't alter any tool settings.

Even if you line pages up perfectly you'll find that once the image is on screen any slight skew in the original printed page really stands out. So it's best to scan a little oversize, to simplify later de-skew and re-crop processing stages.

Once you have all the pages scanned, in the best quality you can achieve, ensure you have a complete, sequentially numbered set of files, one for each page, in a single folder. These are the raw scan files, with NO post-processing yet.
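Before making the set read only, it's worth checking mechanically that no page was skipped or saved twice under two names. A minimal Python sketch; the `_(\d+)\.png$` pattern assumes scanner output names like `hp3456_007.png` (a made-up example), so adjust it to whatever your scanner software produces.

```python
import re
from collections import Counter


def check_sequence(filenames, pattern=r"_(\d+)\.png$", start=1):
    """Return (missing, duplicated) sequence numbers in a list of scan file names."""
    counts = Counter()
    for name in filenames:
        m = re.search(pattern, name)
        if m:
            counts[int(m.group(1))] += 1  # '007' and '7' both count as page 7
    if not counts:
        return [], []
    missing = [n for n in range(start, max(counts) + 1) if n not in counts]
    duplicated = sorted(n for n, c in counts.items() if c > 1)
    return missing, duplicated


print(check_sequence(["hp3456_001.png", "hp3456_002.png", "hp3456_004.png", "hp3456_04.png"]))
```

In practice the list would come from something like `os.listdir("original_scans")`.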

Make these files read only. The folder name should be something obvious like 'original_scans'. These are your source material, and you won't ever modify them. It's a good idea to keep them permanently, in case you later find errors in your post processing that need fixing. That folder should also contain a text file with the recipe.
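For a scripted workflow, here is a minimal Python sketch that clears the write permission bits on every file under the folder. On Windows, Python's `chmod` only toggles the file's read-only attribute, which amounts to the same thing there. The folder name in the usage comment is just the example name above.

```python
import stat
from pathlib import Path

WRITE_BITS = stat.S_IWUSR | stat.S_IWGRP | stat.S_IWOTH


def make_read_only(folder: str) -> int:
    """Clear the write bits on every file under `folder`; return how many files."""
    count = 0
    for path in Path(folder).rglob("*"):
        if path.is_file():
            path.chmod(path.stat().st_mode & ~WRITE_BITS)
            count += 1
    return count


# Usage: make_read_only("original_scans")
```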

Typically your scanner software will be able to automatically number sequences of scans, with you just telling it the number of digits and starting number. Usually you can also include fixed strings in the name too.

But of course, the file sequence numbers won't match the page numbers in the document. Not ever, because what printed work ever starts numbering with 'page 1, front cover'? Also page numbers tend to be a mix of different formats.

Yet while working with a large set of page scan files it's important to be able to find page '173', 'xii', '4.15' or 'C-9' when you need it. While you're working on them the file names should contain BOTH the image sequence number, and the physical page number. They should also contain enough of the document title that they are unique to that project, and you can't accidentally overwrite scan images from some other project if you goof with drag & drop or something.

I typically use filenames like docname_nnn_ppp.png, where:

docname is an abbreviation of the document title. In the final html/zip I may omit this from the image files.

nnn is a sequence number, of however many digits are required for the highest number. They run 001 to whatever. These are in constant format so the images sort correctly in directory listings.

ppp is an exact quote of the actual page number in the document. May be absent, or a description, roman numerals, page numbers, etc.

.png is the filetype suffix, and PNG is a non-lossy format.
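A minimal Python sketch of building names in this scheme. The character substitution for page labels is my own assumption (spaces and characters Windows forbids in file names become '-'), and `hp3456` is a made-up document abbreviation.

```python
import re


def page_filename(docname: str, seq: int, page_label: str, total: int, ext: str = "png") -> str:
    """Build docname_nnn_ppp.ext, zero-padding nnn to the digit count of `total`."""
    nnn = str(seq).zfill(len(str(total)))
    # Keep the page label as quoted, except characters awkward in file names.
    ppp = re.sub(r'[\\/:*?"<>|\s]+', "-", page_label.strip())
    parts = [docname, nnn] + ([ppp] if ppp else [])  # ppp may be absent
    return "_".join(parts) + "." + ext


for seq, label in [(1, "front cover"), (14, "xii"), (180, "4.15"), (212, "")]:
    print(page_filename("hp3456", seq, label, total=212))
```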

Once you've finished processing the page images and are constructing the final document, if the wrapper is html you might want to avoid wasting bytes on repetitive long file names, and reduce the names back to just the plain sequential numerics. The names will all occur multiple times in the html, and any bytes beyond those absolutely needed are a waste.

But do retain the same basic numbering as the original scan and work files, in case you need to fix errors in a few pages.
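A minimal Python sketch of that renaming step, written as a dry run that only builds the old-to-new mapping and refuses to continue if two files would collide. It assumes names follow the docname_nnn_ppp.png scheme described above, with the document abbreviation passed in explicitly so that abbreviations containing underscores still work.

```python
import re


def short_names(filenames, docname):
    """Map 'docname_nnn_ppp.ext' -> 'nnn.ext', keeping the original sequence number."""
    prefix = docname + "_"
    plan = {}
    for name in filenames:
        m = re.match(re.escape(prefix) + r"(\d+)(?:_.*)?\.(\w+)$", name)
        if not m:
            raise ValueError(f"does not match the naming scheme: {name}")
        new = f"{m.group(1)}.{m.group(2)}"
        if new in plan.values():
            raise ValueError(f"two files would both become {new}")
        plan[name] = new
    return plan


print(short_names(["hp3456_001_front-cover.png", "hp3456_180_4.15.png"], "hp3456"))
```

Once the mapping looks right, each pair can be applied with `Path(folder, old).rename(Path(folder, new))`.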

A useful freeware utility for bulk file renaming is http://www.1-4a.com/rename/

There are several advantages to having a character-based copy of a document, rather than images of it.

In general an image of text will be 10 to 100+ times bigger in filesize than the plain text — depending on the image coding scheme and resolution, and whether the text is plain ASCII/Unicode, or a styled document format.

And so at present a choice must still be made: OCR or images?

Since this article is about preserving rare and historical technical documents, the answer is obvious. One must choose the path that does not result in data loss. This means images must be used, since they capture both the soul and detail of the original work.

When a better document representational data format is developed in future (combining benefits of both searchable text and the accuracy of images), documents saved now as images can be retrofitted to the better format with no further data loss. Retaining the original images plus added searchable encoded text.

On the other hand a document OCR'd and saved as text now, can never be restored to its original appearance. That was permanently lost in the OCR process.

Furthermore, it's not just the final image X,Y size and encoding method that determine the file size. One of the most important factors is the nature of the image content itself, and how it has been optimized during post processing. Here the art lies in removing superfluous 'noise' from the hi-res image, even before it is scaled to the final size and compression format. For example a large area of pure white, in which every pixel has the value 0xFFFFFF (or any uniform value), will compress to almost nothing. On the other hand, that same area of the image, if it consists of noisy dither near white, will not compress very well and may require many thousands of additional bytes in the final file, even though it may appear on screen to be 'pure white'.

When bundling the whole thing into a single archive file for distribution, be aware that JPG/PNG files are already compressed, with little redundancy left, so the archive's compression can't improve significantly on that. In fact the result will likely be slightly larger than the sum of the original file sizes, since there are overheads due to inclusion of file indexes, etc.
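A small Python demonstration of this, using `zipfile` with DEFLATE compression. Random bytes stand in for an already-compressed PNG or JPG here. That is an approximation, but like well-compressed image data they have essentially no redundancy left to squeeze out.

```python
import io
import os
import zipfile

payload = os.urandom(200_000)  # stand-in for an already-compressed image file

buf = io.BytesIO()
with zipfile.ZipFile(buf, "w", compression=zipfile.ZIP_DEFLATED) as zf:
    zf.writestr("page_001.png", payload)

archive_size = len(buf.getvalue())
# The archive comes out slightly larger than its content: DEFLATE finds nothing
# to remove, and the ZIP headers and central directory are pure overhead.
print(len(payload), archive_size)
```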

The RAR-book

link to description, history and how-to image.

Benefits

- Allows large data objects to present immediately as a cover illustration in standard filesystem viewers.

- Not necessary to parse the file beyond the illustration, to see the cover.

- The attached data objects can be of any kind, including unknown formats, and any number.

Problems - only WinRAR seems to enable this. Why?

Why the hell don't other file compression utils do the same 'search for archive header' thing?

7-ZIP does!
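The trick works because these archive tools search for the archive structure inside the file rather than insisting it starts at byte 0, while most image viewers read the picture from the start and ignore trailing data. Python's standard library can't write RAR, so here is a minimal sketch of the same idea using ZIP instead, whose central directory is located from the end of the file. Python's own `zipfile` reader copes with the prepended image bytes. The 'cover' is a made-up byte string, not a real JPEG.

```python
import io
import zipfile

# Hypothetical stand-in for cover.jpg: JPEG start/end markers around dummy bytes.
cover = b"\xff\xd8" + b"not really image data" + b"\xff\xd9"

# Build the document archive in memory.
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w", compression=zipfile.ZIP_DEFLATED) as zf:
    zf.writestr("index.html", "<html><body>page list here</body></html>")
    zf.writestr("001.png", b"page image bytes")

book = cover + buf.getvalue()  # picture first, archive appended after it

# A ZIP reader still finds the contents despite the leading image bytes.
with zipfile.ZipFile(io.BytesIO(book)) as zf:
    names = zf.namelist()
    html = zf.read("index.html").decode()
print(book[:2] == b"\xff\xd8", names)
```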

why I hate PDF and won't use it. (More Adobe bashing)

Adobe Postscript was nice. A relatively sensible page description language.

PDF is just Postscript wrapped up in a proprietary obfuscation and data-hiding bundle.

It started out proprietary. Now _some_ of the standards are freely available, but the later 'archival PDF' ones require payment to obtain. Not acceptable.

The PDF document readers are always pretty horrible. Many flaws:

- The single page vs continuous scroll mess.

- When saving pics from PDF pages, the resulting file is the image as you see it, NOT the full resolution original. There should be an option to save at full res, but there isn't. So image saving is unavoidably lossy. Unacceptable. I also strongly suspect it's deliberate. You can use Photoshop to open the original images from a PDF, but the process is painful.

- The horrible mess usually resulting if you try to ctl-C copy text from PDF.

- Moving to page n in long PDFs is ghastly slow and flaky.

- PDF page numbers almost never match actual page numbers.

- Zoom is f*cked. Does not sensibly maintain focal point.

Any encapsulation method MUST provide easy extraction of the exact image files as originally bundled. This is why schemes like html+images are better than PDF: once the document is extracted, the original images are right there, in the native file system.

- Usually very poor handling of variable size pages. Eg schematic foldouts.

- Document structure not accessible for modification without special utilities.

%%%

Topics

For each, describe typical sequence of graphics editor actions. Illustrate with commands and before & after pics.

* Descreening. If any of this is needed, it MUST be done before any other processing.

* Deskewing pages

* Unifying page content placement (see notes in 'to_add')

* Stitching together scans of large sheets

* Improving saturation (B&W and colour) of ink

* Image noise.

* Cleaning up page background:

  - specks
  - creases/folds
  - shadows, eg at spine
  - scribbles
  - area tonal flaws, off-white, etc.

* Repairing print flaws in characters, symbols, etc.:

  - copy/paste identical char/word of same font.

-automation- I wish. Need to find ways to script all these actions.

%%% describe method of creating a selection of all the 'wanted' tones, shrink, expand (to remove isolated specks), inverting (now the selection should include only all 'blank' areas), fill with white, to clean background white.

Need to be able to:

1. Select a colour range (eg near-white).
2. Invert the selection. This selects all the 'wrong' colours, which includes lots of other picture elements too.
3. Create a new 'Flag' layer, and fill the selection on that layer with some bright colour, eg green, to show up the imperfections.
4. Deselect all. With the paintbrush and block select+fill, manually wipe imperfections where desired, but NOT in other areas.

PROBLEM: How to over-paint both the invisible imperfection on the picture layer PLUS the colour on the flag layer, at the same time?
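As a rough illustration of the selection logic described in the note above (select the 'wanted' non-white tones, shrink then expand to drop isolated specks, treat everything else as background and fill it white), here is a minimal pure-Python sketch on a grayscale image held as a list of rows. It is an automated stand-in, not the manual brush-over workflow. The 235 near-white threshold and the 3x3 neighbourhood are assumptions, and at low resolutions shrink/expand can also erase genuine fine strokes, so the returned list of changed pixels plays the role of the 'Flag' layer for review.

```python
def _neighbourhood(mask, y, x):
    """Yield the 3x3 neighbourhood of (y, x), clipped at the image edges."""
    for j in range(max(0, y - 1), min(len(mask), y + 2)):
        for i in range(max(0, x - 1), min(len(mask[0]), x + 2)):
            yield mask[j][i]


def clean_background(pixels, near_white=235):
    """Return (cleaned, changed) for 8-bit grayscale `pixels` (a list of rows).

    'Wanted' pixels are those darker than near_white. Shrinking (erosion) then
    expanding (dilation) the wanted mask removes specks smaller than 3x3.
    Everything outside the result is set to pure white 255.
    """
    h, w = len(pixels), len(pixels[0])
    wanted = [[p < near_white for p in row] for row in pixels]
    shrunk = [[all(_neighbourhood(wanted, y, x)) for x in range(w)] for y in range(h)]
    kept = [[any(_neighbourhood(shrunk, y, x)) for x in range(w)] for y in range(h)]
    cleaned = [row[:] for row in pixels]
    changed = []  # like the 'Flag' layer: every pixel that was altered
    for y in range(h):
        for x in range(w):
            if not kept[y][x] and pixels[y][x] != 255:
                cleaned[y][x] = 255
                changed.append((y, x))
    return cleaned, changed


page = [[250] * 7 for _ in range(7)]  # faintly textured 'white' paper
for y in range(1, 4):
    for x in range(1, 4):
        page[y][x] = 0                # a solid 3x3 block of ink
page[5][5] = 50                       # an isolated dark speck
cleaned, changed = clean_background(page)
print(cleaned[2][2], cleaned[5][5], cleaned[0][0], len(changed))
```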

It turns out that while Photoshop does a great job of rotating images in RGB/8 mode, images in Indexed color mode will be slightly but irrevocably stuffed up by rotation. It appears to be related to the colour index table not containing values required to achieve a smoothly shaded line edge, resulting in that nasty 'stepped' effect. Which seriously destroys the visual linearity of line edges and the perceived overall line positioning. As seen in the center example. Notice that the font is corrupted as well.

It's non-recoverable, and carries through scaling operations. So any work you did on the images after de-skewing them is wasted.

The lesson is, always ensure scan images are converted to RGB/8 mode before you do anything else with them.

But no. There are some unsolved issues still. Or at least if solutions do exist they are obscure enough that so far they haven't turned up in my searches. If anyone knows of solutions, please let me know.

These:

(major topic)

Explanation of what print screening is, and why it's used.

(link to my article 'Disconnecting the Dots')

The problem of converting offset printing screened shading, to solid tones.

problems dealing with offset screening. (egs from the HP manual and others)

(detailed description, with lots of pics. Include section critical of Adobe for not implementing this greatly needed feature in Photoshop and requesting that they do so.)

Software tools, and comments

- Ref 'magic filter' in CS6, and its use & shortcomings.

- http://www.descreen.net

- Other descreening utilities.

%%% still to add graphic examples of each case. Also improve the topic/header styling.

Cause: scanning in 2-tone B&W 'fax mode', or over-posterization of tonal scale.

Result: The original appearance of the text and images is irretrievably lost. It's readable (mostly) but looks disgusting. The jaggies never really disappear, even when zoomed out so far the text is barely readable. Even at much higher scan resolution the fundamental problem is that the one-bit encoding results in edge shifting to align with the sampling grid.

People who insist on doing scans like this even after being shown the zoomed-in horror, should be slowly beaten to death with some old 8-bit processor board.

Fix: This will not be fixed merely by increasing the scan resolution. The correct fix is to scan in grayscale, at sufficiently high resolution to resolve all fine edge detail.
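A one-dimensional model shows why. Below, a row of pixels is area-sampled across a vertical ink edge at a fractional position. The grey level of the boundary pixel records where within that pixel the edge falls, and summing the ink coverage along the row recovers the position. Thresholding to one bit throws that away and snaps the edge to the nearest pixel boundary; a finer grid only makes the snapping steps smaller. This is an idealised Python sketch (perfect area sampling, no scanner blur), not a model of any particular scanner.

```python
def sample_edge(edge_x: float, width: int = 8) -> list:
    """8-bit grey values for a row crossing an ink edge at fractional position edge_x.

    Pixel i covers [i, i+1). Ink (0) lies left of edge_x, paper (255) to the right,
    and each pixel's value is set by the fraction of its area covered by ink.
    """
    row = []
    for i in range(width):
        ink = min(max(edge_x - i, 0.0), 1.0)  # ink coverage of pixel i, 0..1
        row.append(round(255 * (1 - ink)))
    return row


def to_one_bit(row: list, threshold: int = 128) -> list:
    """'Fax mode': every pixel becomes pure black or pure white."""
    return [0 if v < threshold else 255 for v in row]


def edge_position(row: list) -> float:
    """Estimate the edge position as the total ink coverage along the row."""
    return sum((255 - v) / 255 for v in row)


for true_edge in (3.3, 3.6):
    grey = sample_edge(true_edge)
    print(true_edge, round(edge_position(grey), 2), edge_position(to_one_bit(grey)))
```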

When purifying black and white, don't lose the gray-scale shading around line/character edges.

It is important to avoid loss of the tonal shading on the edges of solid color (such as text and lines) that provides sub-pixel accuracy of edge perception to the human eye.

Overdoing it results in 'fax-like' jagged edges of lines, text, etc.
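One way to purify blacks and whites without that loss is a levels-style mapping rather than a hard threshold. Clamp everything darker than a chosen black point to solid black and everything lighter than a white point to pure white, then stretch the tones in between linearly so edge pixels keep intermediate values. A minimal Python sketch for 8-bit grayscale; the 40 and 215 points and the sample row are placeholders, to be tuned by eye on your own scans and recorded in the recipe.

```python
def purify(value: int, black_point: int = 40, white_point: int = 215) -> int:
    """Levels-style tone mapping for one 8-bit grey value."""
    if value <= black_point:
        return 0        # near-black ink becomes solid black
    if value >= white_point:
        return 255      # near-white paper becomes pure white
    # In between: stretch linearly, preserving the edge shading.
    return round(255 * (value - black_point) / (white_point - black_point))


def hard_threshold(value: int, cutoff: int = 128) -> int:
    """What over-posterization does: no intermediate tones survive."""
    return 0 if value < cutoff else 255


edge_row = [12, 30, 96, 178, 238, 251]  # hypothetical row across a character edge
print([purify(v) for v in edge_row])          # tones stretched, edge greys kept
print([hard_threshold(v) for v in edge_row])  # edge greys lost
```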

See: Cleaning up tonal flaws in scans

Visually the effect may be below the threshold of noticeability unless the image is magnified. However, it does considerably degrade the ability of OCR software to retrieve text accurately from the image. Our purpose is to preserve information accurately, in the hope that in future there will be a document format allowing searchable text to be overlaid on the original page appearance, so degrading the potential for later OCR processing is a serious negative. For this reason JPG compression should be avoided when saving images of text in documents for historical purposes. It may be acceptable to use JPG or other lossy formats to encode non-text images, where there are no sharp edges in the image, or the precision of any such edges is of no importance.

Fix: Don't use JPG for text. It is preferable to process the image to remove original noise and superfluous detail from areas that should be one color, for instance the white paper background (using human perception to judge what is acceptable as a representation of the original document), and then compress the image with a non-lossy algorithm such as PNG. Even non-lossy compression can very efficiently minimize the data size required to represent flat-colour areas.

The exceptions to this rule are the small image used for a RAR-book cover, and any intrinsically low-resolution non-textual illustrations in the document. The RAR-book cover is effectively a thumbnail, and can be compressed with a lossy method to give the required visual quality. There should be a full sized copy of the scanned cover inside the archive anyway. With other illustrations it is an aesthetic decision - does zooming in to the result fail to reveal significant detail that was visible in the original?

Cause: Bleedthrough happens due to scanner illumination light penetrating the page, being selectively absorbed by ink on the other side of the paper, and reflecting from the next page or scanner white backing. Especially with very thin paper it can be a serious problem.

This needs to be prevented because it's visually unpleasant, the extra detail in what should be flat page background colour results in pointlessly higher file sizes, and it adds 'noise' that will impede OCR function.

If the bleedthrough occurs in an area such as a grayscale photo or coloured background, it can be impossible to remove in post processing. Where it occurs together with text on the front side, the background noise can result in distortion of the font outlines during tonal cleanup.

Fix: Insert a matt black sheet behind the page currently being scanned. Black felt or velvet works best.

Note that if you do this for some pages in a document scan project, you should do it for all pages, because the black backing lowers the total effective reflectivity of the page, and you'll get observable changes between page scans with and without the backing.

If the screening is mixed in with hard-edged elements (text and lines), then it's more difficult.

See: Offset Printed Screening

Once the screening pattern is gone (replaced with smoothly shaded tones), in the final scaling stage when the overall image resolution is lowered to the desired value, no moire patterning will occur.
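A one-dimensional toy model of why the order matters: a 'screen' with one ink dot every 3 pixels, shrunk by a factor of 2 with a simple box filter, does not average out to a flat tone but leaves a coarser repeating pattern, which in 2D shows up as moire. Smoothing over exactly one screen period first gives a flat tone that survives the downscale. Real descreening is two-dimensional and far less tidy; the period of 3 and the moving-average 'descreen' are simplifying assumptions in this Python sketch.

```python
def screened_row(n: int, period: int = 3) -> list:
    """1-D stand-in for a halftone screen: one black dot every `period` pixels."""
    return [0 if i % period == 0 else 255 for i in range(n)]


def downscale_by_2(row: list) -> list:
    """Box-filter downscale: average each adjacent pair of pixels."""
    return [(row[i] + row[i + 1]) / 2 for i in range(0, len(row) - 1, 2)]


def descreen(row: list, period: int = 3) -> list:
    """Crude descreen: average over one screen period (wrapping at the row end)."""
    n = len(row)
    return [sum(row[(i + k) % n] for k in range(period)) / period for i in range(n)]


def spread(row: list) -> float:
    """Difference between lightest and darkest values: 0 means a flat tone."""
    return max(row) - min(row)


row = screened_row(60)
print(spread(downscale_by_2(row)))            # pattern survives the downscale
print(spread(downscale_by_2(descreen(row))))  # flat tone: no pattern left
```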

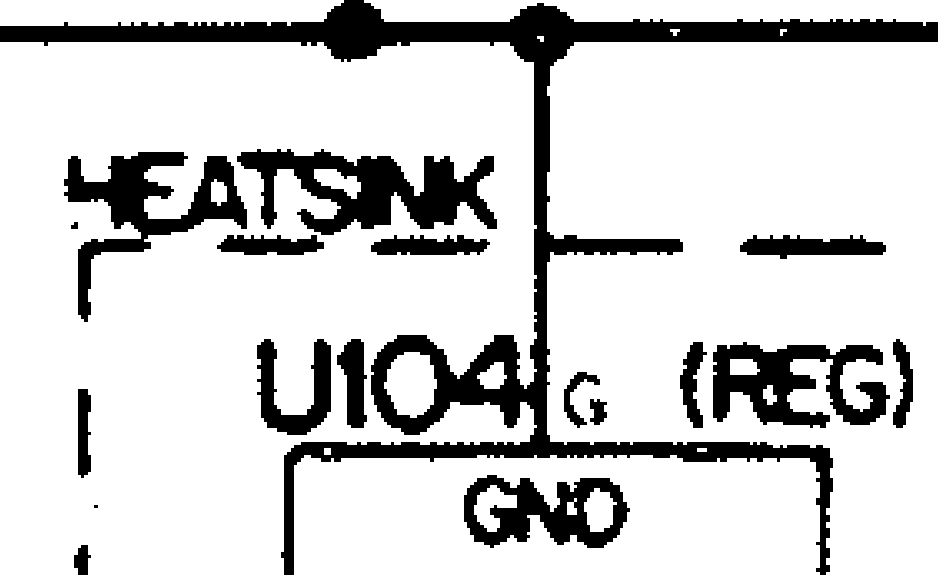

If sections are missing then the entire electronic document is worse than useless, since typically those large pages (schematics, etc) were the crucial core, the essential purpose of the entire document. Without them intact the electronic version is just a waste of storage space, a red herring, even a deception: until you try to use it you probably thought you had a usable document, and so didn't keep searching for a complete version.

Fix: Find an original paper copy and redo the scanning right. Scan the entire large sheets, in overlapped pieces as required, at high enough resolution and in grayscale to capture all fine text (in colour if there are colours), stitch them together in Photoshop, get the whites and blacks right while keeping some grayscale so lines are still clean, then compress as much as possible without losing any visual detail. The finest text must still be clearly readable.

Yes it's a lot of work. But what's the point of doing it at all, if not properly?

Usually there will be no reason mentioned for the omissions, and even no mention that there were more pages.

The most annoying cases are where front & back covers are omitted, or first few pages where the only text was the manual part number, date of publishing, ISBN, etc, that was so 'unimportant' to the person who scanned it.

Eg: http://oscar.taurus.com/~jeff/2100/2100ref/index.html

It's nice that it's all converted to ASCII text, but what did it LOOK like?

This of course is serious data loss, and if you have no access to the original document it's unrecoverable.

Fix: Don't omit anything. Include all pages, even blanks. When correctly optimised and data compressed, blank pages consume very little data size. If for some reason you must omit blanks, or there were pages missing in the original, make a note of those pages and include it in the etext.